Hi. The following is an excerpt from Tim’s new book What’s Our Problem? A Self-Help Book for Societies. This is a sample from the book’s first chapter. To read the whole thing, you can buy the ebook or audiobook or get the Wait But Why version.

Chapter 1:

The Ladder

There is a great deal of human nature in people.

– Mark Twain

The Tug-of-War in Our Heads

The animal world is a stressful place to be.

The issue is that the animal world isn’t really an animal world—it’s a world of trillions of strands of genetic information, each one hell-bent on immortality. Most gene strands don’t last very long, and those still on Earth today are the miracle outliers, such incredible survival specialists that they’re hundreds of millions of years old and counting.

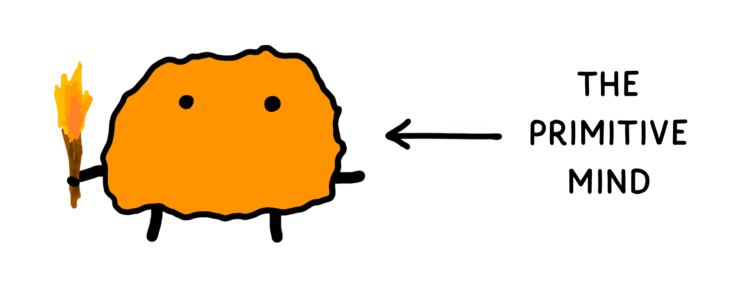

Animals are just a hack these outlier genes came up with—temporary containers designed to carry the genes and help them stay immortal. Genes can’t talk to their animals, so they control them by having them run on specialized survival software I call the Primitive Mind:

The Primitive Mind is a set of coded instructions for how to be a successful animal in the animal’s natural habitat. The coder is natural selection, which develops the software using a pretty simple process: Software that’s good at making its animal pass on its genes stays around, and the less successful software is discontinued. Genetic mutation is like a bug appearing in the software from time to time, and every once in a while, a certain bug makes the software better—an accidental software update. It’s a slow way to code, but over millions of generations, it gets the job done.

The infrequency of these updates means an animal’s software is actually optimized for the environment of its ancestors.1 For most animals, this system works fine. Their environment changes so slowly that whatever worked a hundred thousand or even a million years in the past probably works just about as well in the present.

But humans are strange animals. A handful of cognitive superpowers, like symbolic language, abstract thinking, complex social relationships, and long-term planning, have allowed humans to take their environment into their own hands in a way no other animal can. In the blink of an eye—around 12,000 years, or 500 generations—humans have crafted a totally novel environment for themselves called civilization.

As great as civilization may be, 500 generations isn’t enough time for evolution to take a shit. So now we’re all here living in this fancy new habitat, using brain software optimized for our old habitat.

You know how moths inanely fly toward light and you’re not really sure why they do this or what their angle is? It turns out that for millions of years, moths have used moonlight as a beacon for nocturnal navigation—which works great until a bunch of people start turning lights on at night that aren’t the moon. The moth’s brain software hasn’t had time to update itself to the new situation, and now millions of moths are wasting their lives flapping around streetlights.

In a lot of ways, modern humans are like modern moths, running on a well-intentioned Primitive Mind that’s constantly misinterpreting the weird world we’ve built for ourselves.

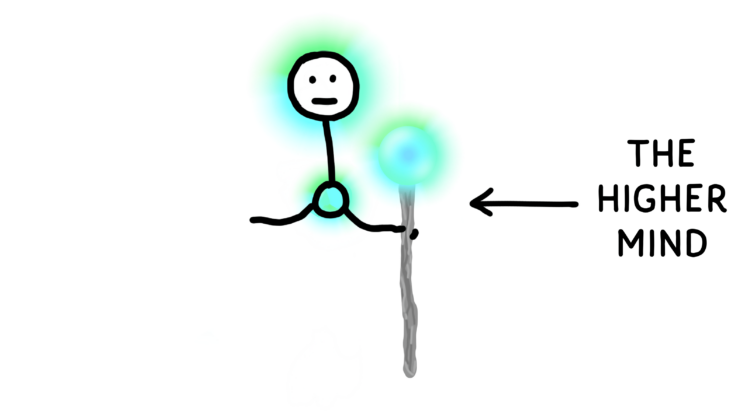

The good news is, our Primitive Mind has a roommate: the Higher Mind.

The Higher Mind is the part of you that can think outside itself and self-reflect and get wiser with experience.

Unlike the Primitive Mind, the Higher Mind can look around and see the world for what it really is. It can see that you live in an advanced civilization and it wants to think and behave accordingly.

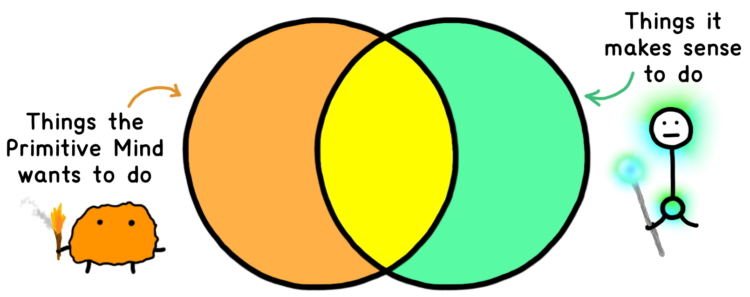

The Primitive Mind and Higher Mind are a funny pair. When things are going well, the inside of your head looks like this:

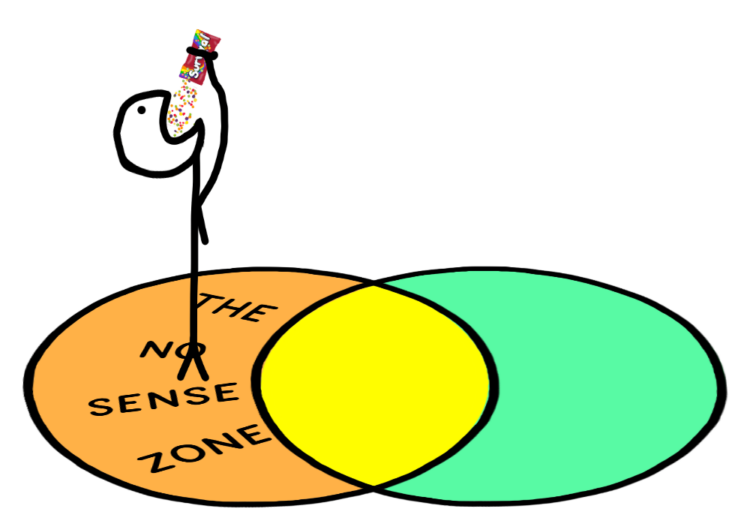

The Higher Mind is large and in charge, while its little software pet chases dopamine around, taking care of the eating and sleeping and masturbating. The Primitive Mind, at its core, just wants to survive and reproduce and help its offspring reproduce. These are all things the Higher Mind is totally on board with when it makes sense. When the Primitive Mind wants you to think and behave in a way that doesn’t map onto reality, the Higher Mind tries to override the software, keeping you within the “makes sense” circle.

The trouble starts when the balance of power changes.

As smart as the Higher Mind may be, it’s not very good at managing the Primitive Mind. And when the Primitive Mind gains too much control, you might find yourself drifting over to the No Sense Zone.

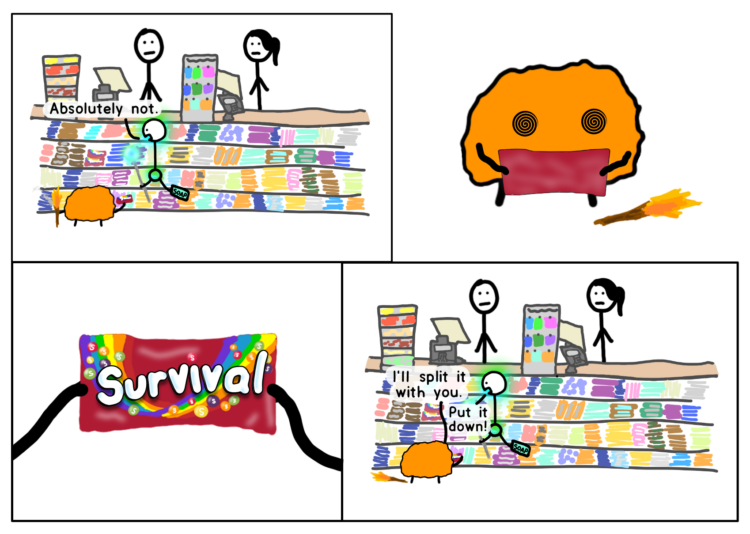

Like, we’ve all been here, trying to buy something at a drugstore and becoming enticed by a succulent bag of junk food.

The Primitive Mind and Higher Mind help us see what’s really going on:

Like the moth flying toward a streetlight, the human Primitive Mind thinks it’s a great decision to eat Skittles. In the ancient human world, there was no such thing as processed food, calories were hard to come by, and anything with a texture and taste as delectable as a Skittle was surely a good thing to eat. Mars, Inc., which makes Skittles, knows what makes your Primitive Mind tick and is in the business of tricking it. Your Higher Mind knows better. If it holds the reins of your mind, it’ll either skip the Skittles or have just a few, as a little treat for its primitive roommate.

But sometimes, there you are, 80 Skittles into your binge, hating yourself—because your Primitive Mind has hijacked the cockpit.

This kind of internal disagreement pops up in many parts of life, like a constant tug-of-war in our heads—a tug-of-war over our thoughts, our emotions, our values, our morals, our judgments, and our overall consciousness.

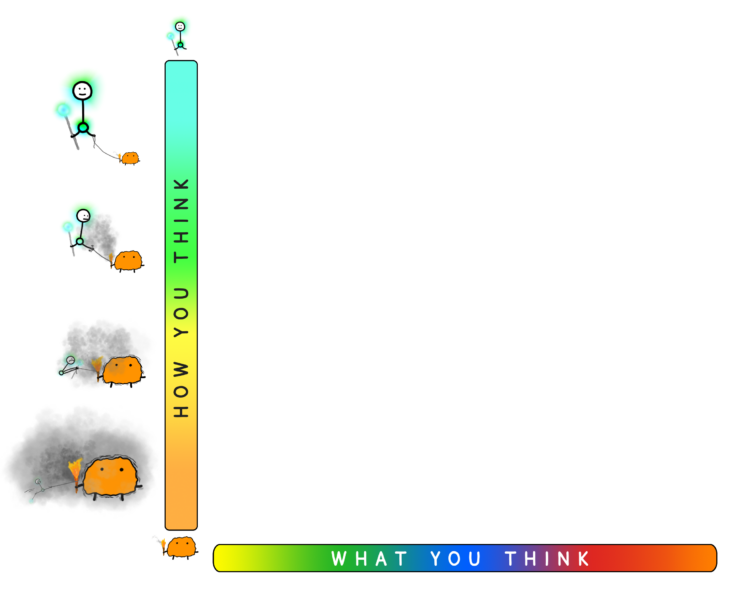

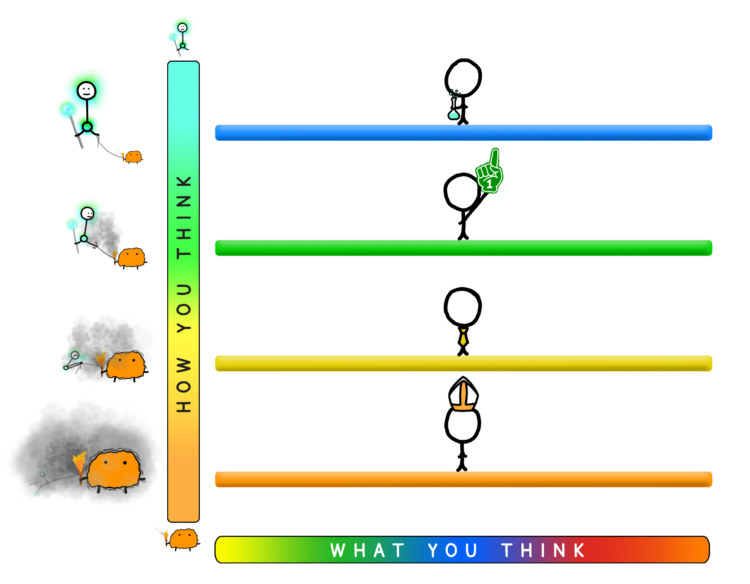

The tug-of-war is a spectrum that we can simplify into four basic states—or four rungs of a Ladder:

While the Higher Mind and Primitive Mind in this book overlap with elements of some other models of the mind, they are not meant to map perfectly onto any of them. Our characters and the tug-of-war between them will become intuitive as you read this book.

Humans are so complicated because we’re all a mixture of both “high-rung” and “low-rung” psychology.

When the Higher Mind is winning the tug-of-war, its staff illuminates our minds with clarity, including awareness of the Primitive Mind and what it’s up to. The Higher Mind understands that primitive pleasures like sex, food, and all-in-good-fun tribalism like sports fandom are enjoyable, and often necessary, parts of a human life. And like a good pet owner, the Higher Mind is more than happy to let the Primitive Mind have its fun. Primitive bliss is great, as long as it’s managed by the Higher Mind, who makes sure it’s done in moderation, it’s done for the right reasons, and no one gets hurt. In short, when we’re up on the high rungs, we act like grown-ups.

But when something riles up the Primitive Mind, it gets bigger and stronger. Its torch—which bears the primal fires of our genes’ will for survival—grows as well, filling our minds with smoky fog. This fog dulls our consciousness, so when we’re most under the spell of our Primitive Mind, we don’t even realize it’s happening. The Higher Mind, unable to think clearly, begins directing its efforts toward supporting whatever the Primitive Mind wants to do, whether it makes sense or not. When we slip down to the Ladder’s low rungs, we’re short-sighted and small-minded, thinking and acting with our pettiest emotions. We’re low on self-awareness and high on hypocrisy. We’re our worst selves.

We all have our own Ladder struggles. Some of us struggle with procrastination,2 an uncontrollable temper, or an addiction to sugar or gambling; others suffer from an irrational fear of failure or crippling social anxiety. We all self-defeat in our own way—in each case because our Higher Minds lose control of our heads and send us flapping our moth wings toward the streetlights.

In this book, we’re going to explore a particular group of Ladder struggles—those I believe are most relevant to our big question about today’s societies: What’s our problem? The first stop on our journey will be our own heads, where we’ll use the Ladder to help us make sense of a key process: how we form our beliefs.

Vertical Thinking

Why do we believe what we believe?

Our beliefs make up our perception of reality, drive our behavior, and shape our life stories. History happened the way it did because of what people believed in the past, and what we believe today will write the story of our future. So it seems like an important question to ask: How do we actually come to believe the things we end up believing?

To explore this question, let’s create a way to visualize it.

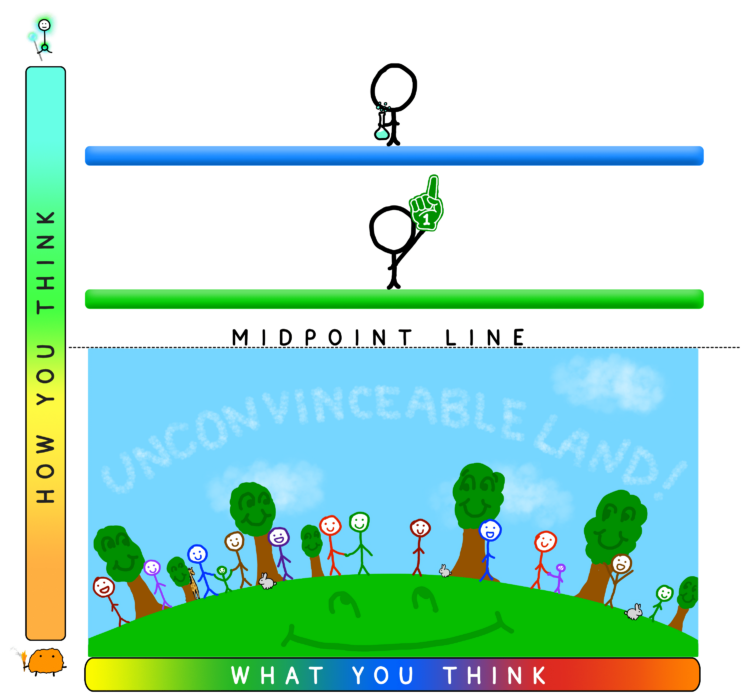

When it comes to our beliefs, let’s imagine the range of views on any given topic as an axis we can call the Idea Spectrum.

The Idea Spectrum is a simple tool we can use to capture the range of what a person might think about any given topic—their beliefs, their opinions, their stances.3

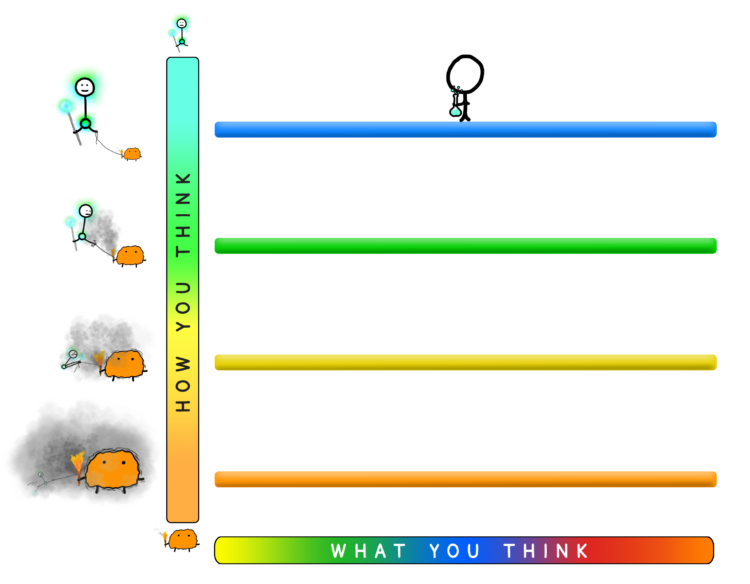

For most beliefs, we’re so concerned with where people stand that we often forget the most important thing about what someone thinks: how they arrived at what they think. This is where the Ladder can help. If the Idea Spectrum is a “what you think” axis, we can use the Ladder as a “how you think” axis.

To understand how our thinking changes depending on where we are on the Ladder, we have to ask ourselves: how do the two minds like to form beliefs?

Your Higher Mind is aware that humans are often delusional, and it wants you to be not delusional. It sees beliefs as the most recent draft of a work in progress, and as it lives more and learns more, the Higher Mind is always happy to make a revision. Because when beliefs are revised, it’s a signal of progress—of becoming less ignorant, less foolish, less wrong.

Your Primitive Mind disagrees. For your genes, what’s important is holding beliefs that generate the best kinds of survival behavior—whether or not those beliefs are actually true.1 The Primitive Mind’s beliefs are usually installed early on in life, often based on the prevailing beliefs of your family, peer group, or broader community. The Primitive Mind sees those beliefs as a fundamental part of your identity and a key to remaining in good standing with the community around you. Given all of this, the last thing the Primitive Mind wants is for you to feel humble about your beliefs or interested in revising them. It wants you to treat your beliefs as sacred objects and believe them with conviction.

So the Higher Mind’s goal is to get to the truth, while the Primitive Mind’s goal is confirmation of its existing beliefs. These two very different types of intellectual motivation exist simultaneously in our heads. This means that our driving intellectual motivation—and, in turn, our thinking process—varies depending on where we are on the Ladder at any given moment.

In the realm of thinking, then, the Ladder’s four rungs correspond to four ways of forming beliefs. When your Higher Mind is running the show, you’re up on the top rung, thinking like a Scientist.

Rung 1: Thinking like a Scientist

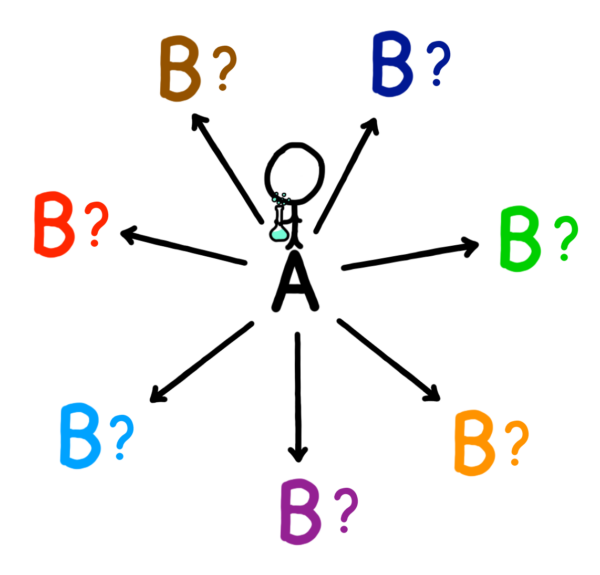

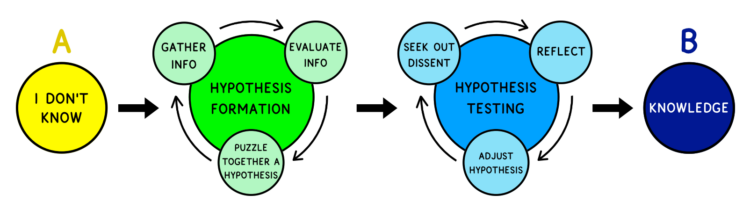

When you’re thinking like a Scientist,4 you start at Point A and follow evidence wherever it takes you.

More specifically, the Scientist’s journey from A to B looks something like this:

The Scientist’s default position on any topic is “I don’t know.” To advance beyond Point A, they have to put in effort, starting with the first stage: hypothesis formation.

Hypothesis formation

Top-rung thinking forms hypotheses from the bottom up. Rather than adopt the beliefs and assumptions of conventional wisdom, you puzzle together your own ideas, from scratch. This is a three-part process:

1) Gather information

In order to puzzle, you need pieces. Each of us is constantly flooded with information, and we have severely limited attention to allot. In other words, your mind is an exclusive VIP-only club with a tough bouncer.

But when Scientists want to learn something new, they try to soak up a wide variety of information on the topic. The Scientist seeks out ideas across the Idea Spectrum, even those that seem likely to be wrong—because knowing the range of viewpoints that exist about the topic is a key facet of understanding the topic.

2) Evaluate information

If gathering info is about quantity, evaluating info is all about quality.

There are instances when a thinker has the time and the means to collect information and evidence directly—with their own primary observations, or by conducting their own studies. But most of the info we use to inform ourselves is indirect knowledge: knowledge accumulated by others that we import into our minds and adopt as our own. Every statistic you come across, everything you read in a textbook, everything you learn from parents or teachers, everything you see or read in the news or on social media, every tenet of conventional wisdom—it’s all indirect knowledge.

That’s why perhaps the most important skill a thinker can have is knowing when to trust.

Trust, when assigned wisely, is an efficient knowledge-acquisition trick. If you can trust a person who actually speaks the truth, you can take the knowledge that person worked hard for—either through primary research or indirectly, using their own diligent trust criteria—and “photocopy” it into your own brain. This magical intellectual corner-cutting tool has allowed humanity to accumulate so much collective knowledge over the past 10,000 years that a species of primates can now understand the origins of the universe.

But trust assigned wrongly has the opposite effect. When people trust information to be true that isn’t, they end up with the illusion of knowledge—which is worse than having no knowledge at all.

So skilled thinkers work hard to master the art of skepticism. A thinker who believes everything they hear is too gullible, and their beliefs become packed with a jumble of falsehoods, misconceptions, and contradictions. Someone who trusts no one is overly cynical, even paranoid, and limited to gaining new information only by direct experience. Neither of these fosters much learning.

The Scientist’s default skepticism position would be somewhere in between, with a filter just tight enough to consistently identify and weed out bullshit, just open enough to let in the truth. As they become familiar with certain information sources—friends, media brands, articles, books—the Scientist evaluates the sources based on how accurate they’ve proven to be in the past. For sources known to be obsessed with accuracy, the Scientist loosens up the trust filter. When the Scientist catches a source putting out inaccurate or biased ideas, they tighten up the filter and take future information with a grain of salt.

When enough information puzzle pieces have been collected, the third stage of the process begins.

3) Puzzle together a hypothesis

The gathering and evaluating phases rely heavily on the learnings of others, but for the Scientist, the final puzzle is mostly a work of independent reasoning. When it’s time to form an opinion, their head becomes a wide-open creative laboratory.

Scientists, so rigid about their high-up position on the vertical How You Think axis, start out totally agnostic about their horizontal position on the What You Think axis. Early on in the puzzling process, they treat the Idea Spectrum like a skating rink, happily gliding back and forth as they explore different possible viewpoints.

As the gathering and evaluating processes continue, the Scientist grows more confident in their puzzling. Eventually, they begin to settle on a portion of the Idea Spectrum where they suspect the truth may lie. Their puzzle is finally taking shape—they have begun to form a hypothesis.

Hypothesis testing

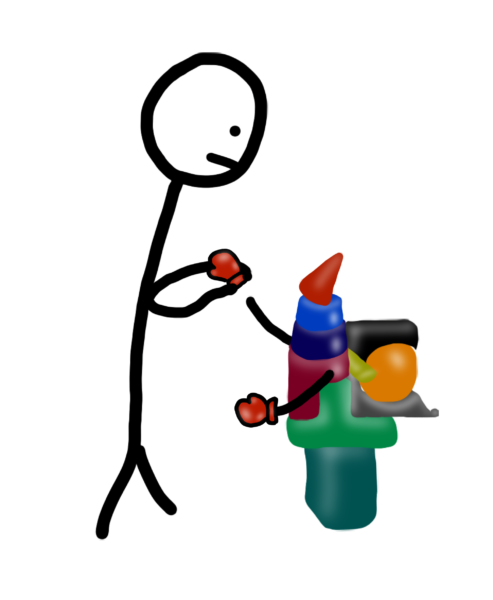

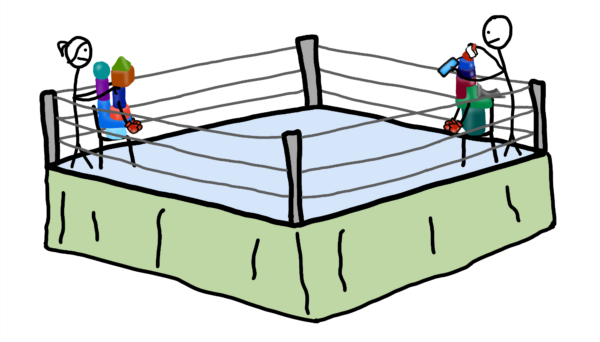

Imagine I present to you this boxer, and we have this exchange:

You’d think I was insane.

But people do this with ideas all the time. They feel sure they’re right about an opinion they’ve never had to defend—an opinion that has never stepped into the ring. Scientists know that an untested belief is only a hypothesis—a boxer with potential, but not a champion of anything.

So the Scientist starts expressing the idea publicly, in person and online. It’s time to see if the little guy can box.

In the world of ideas, boxing opponents come in the form of dissent. When the Scientist starts throwing ideas out into the world, the punches pour in.

Biased reasoning, oversimplification, logical fallacies, and questionable statistics are the weak spots that feisty dissenters look for, and every effective blow landed on the hypothesis helps the Scientist improve their ideas. This is why Scientists actively seek out dissent. As organizational psychologist Adam Grant puts it in his book Think Again:

I’ve noticed a paradox in great scientists and superforecasters: the reason they’re so comfortable being wrong is that they’re terrified of being wrong. What sets them apart is the time horizon. They’re determined to reach the correct answer in the long run, and they know that means they have to be open to stumbling, backtracking, and rerouting in the short run. They shun rose-colored glasses in favor of a sturdy mirror.

The more boxing matches the Scientist puts their hypothesis through, the more they’re able to explore the edges of their conclusions and tweak their ideas into crisper and more confident beliefs.

With some serious testing and a bunch of refinements under their belt, the Scientist may begin to feel that they have arrived at Point B: knowledge.

It’s a long road to knowledge for the Scientist because truth is hard. It’s why Scientists say “I don’t know” so often. It’s why, even after getting to Point B in the learning process, the Scientist applies a little asterisk, knowing that all beliefs are subject to being proven wrong by changing times or new evidence. Thinking like a Scientist isn’t about knowing a lot; it’s about being aware of what you do and don’t know, and about staying close to this dotted line as you learn:

When you’re thinking like a Scientist—self-aware, free of bias, unattached to any particular ideas, motivated entirely by truth and continually willing to revise your beliefs—your brain is a hyper-efficient learning machine.

But the thing is—it’s hard to think like a Scientist, and most of us are bad at it most of the time. When your Primitive Mind wakes up and enters the scene, it’s very easy to drift down to the second rung of our Ladder—a place where your thinking is caught up in the tug-of-war.

Rung 2: Thinking like a Sports Fan

Most real-life sports fans want the games they watch to be played fairly. They don’t want corrupt referees, even if it helps their team win. They place immense value on the integrity of the process itself. It’s just…that they really, really want that process to yield a certain outcome. They’re not just watching the game—they’re rooting.

When your Primitive Mind infiltrates your reasoning process, you start thinking the same way. You still believe you’re starting at Point A, and you still want Point B to be the truth. But you’re not exactly objective about it.

Weird things happen to your thinking when the drive for truth is infected by some ulterior motive. Psychologists call it “motivated reasoning.” I like to think of it as Reasoning While Motivated, the thinking equivalent of drunk driving. As the 6th-century Chinese Zen master Seng-ts’an explains:2

If you want the truth to stand clear before you, never be for or against. The struggle between “for” and “against” is the mind’s worst disease.

When you’re thinking like a Sports Fan, Seng-ts’an and his apostrophe and his hyphen are all mad at you, because they know what they’re about to see—the Scientist’s rigorous thinking process corrupted by the truth-seeker’s most treacherous obstacle:

Confirmation bias.

Confirmation bias is the invisible hand of the Primitive Mind that tries to push you toward confirming your existing beliefs and pull you away from changing your mind.

You still gather information, but you may cherry-pick sources that seem to support your ideas. With the Primitive Mind affecting your emotions, it just feels good to have your views confirmed, while hearing dissent feels irritating.

You still evaluate information, but instead of defaulting to the trust filter’s middle setting, you find yourself flip-flopping on either side of it, depending less on the proven track record of the source than on how much the source seems to agree with you:3

So the puzzle pieces collected in the Sports Fan’s head are skewed toward confirming a certain belief, and this is then compounded by a corrupted puzzling process. Compelling dissent that does make it into a Sports Fan’s head is often forgotten about and left out of the final puzzle.

When it’s time to test the hypothesis, the Sports Fan’s bias again rears its head. If you were thinking like a Scientist, you’d feel very little attachment to your hypothesis. But now you watch your little machine box as a fan, wearing its jersey. It’s Your Guy in the ring. And if it wins an argument, you might even catch yourself thinking, “We won!”

When a good punch is landed on your hypothesis, you’re likely to see it as a cheap shot or a lucky swing or something else that’s not really legit. And when your hypothesis lands a punch, you may have a tendency to overrate the magnitude of the blow or the high level of skill it involved.

Being biased skews your assessment of other people’s thinking too. You believe you’re unbiased, so someone actually being neutral appears to you to be biased in the other direction, while someone who shares your bias appears to be neutral.4

As this process wears on, it’s no surprise that the Sports Fan often ends up just where they were hoping to—at their preferred Point B.

On this second rung of the Ladder, the hyper-optimized learning machine that is the Scientist’s brain has become hampered by a corrupting motivation. But despite learning less than the Scientist, the Sports Fan usually feels a little more confident about their beliefs.

Sports Fans are stubborn, but they’re not hopeless. The Higher Mind is still a strong presence in their head, and if dissenting evidence is strong enough, the Sports Fan will grudgingly change their mind. Underneath all the haze of cognitive bias, Sports Fans still care most about finding the truth.

Drift down any further, though, and you cross the Ladder midpoint and become a different kind of thinker entirely. Down on the low rungs, the Primitive Mind has the edge in the tug-of-war. Whether you’ll admit it or not (you won’t), the desire to feel right, and appear right, has overcome your desire to be right. And when some other motivation surpasses your drive for truth, you leave the world of intellectual integrity and enter a new place.

Unconvinceable5 Land is a world of green grass, blue sky, and a bunch of people whose beliefs can’t be swayed by any amount of evidence. When you end up here, it means you’ve become a disciple of some line of thinking: a religion, a political ideology, the dogma of a subculture. Whatever the case, your intellectual integrity has taken a backseat to intellectual loyalty.

As we descend into Unconvinceable Land, we hit the Ladder’s third rung.

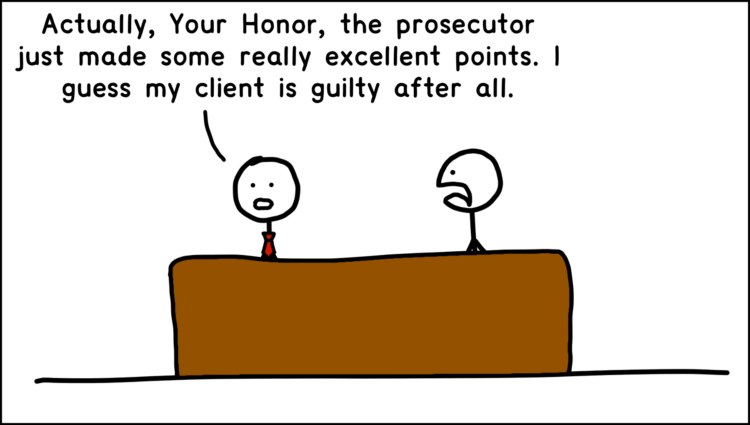

Rung 3: Thinking like an Attorney

An Attorney and a Sports Fan have things in common. They’re both conflicted between the intellectual values of truth and confirmation. The critical difference is which value, deep down, they hold more sacred. A Sports Fan wants to win, but when pushed, cares most about truth. But it’s as if an Attorney’s job is to win, and nothing can alter their allegiance.

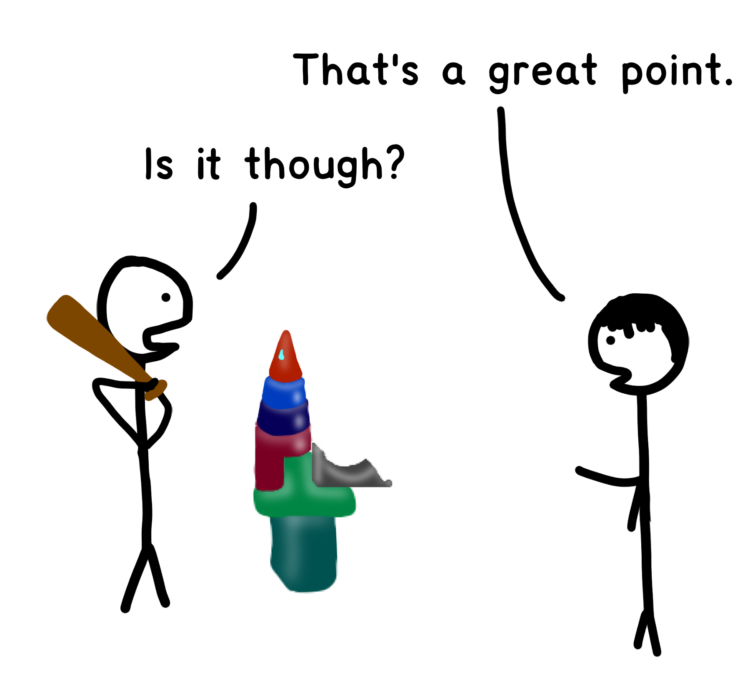

Because would this be a good attorney?

No, it wouldn’t. An Attorney is on a team, period.

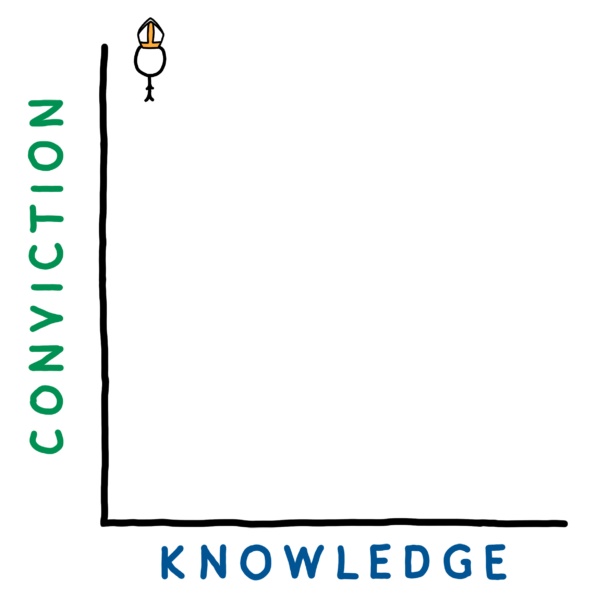

When you’re thinking like an Attorney, you don’t start at Point A at all. You start at Point B. The client is not guilty. Now let’s figure out why.

From there you’ll go through your due diligence, cherry-picking evidence and piecing together an argument that leads right where you want it to.

This isn’t a criticism of real-world attorneys. In an actual courtroom, the attorney’s way of thinking makes sense—because each attorney’s case is only half of what will be presented to the jury. Real-world attorneys know that the best way for the system to yield truth is for them to make the best possible case for one side of the story. But on our Ladder, the cognitive Attorney’s head is like a courtroom with only one side represented—in other words, a corrupt courtroom where the ruling is predetermined.

The Attorney treats their preferred beliefs not like an experiment that can be revised, or even a favorite sports team, but like a client. Motivated reasoning becomes obligated reasoning, and the gathering, evaluating, and puzzling processes function like law associates whose only job is to help build the case for Point B.

If someone really wants to believe something—that the Earth is flat, that 9/11 was orchestrated by Americans, that the CIA is after them—the human brain will find a way to make that belief seem perfectly clear and irrefutable. For the Attorney, the hypothesis formation stage is really a belief-strengthening process. They inevitably end up with the same viewpoints they started with, now beefed up with a refreshed set of facts and arguments that remind them just how right they are.

In the hypothesis testing phase, the Attorney’s refusal to genuinely listen to a dissenter, combined with a bag of logical fallacy tricks and their strong sense of conviction, ensures that they’re an absolutely infuriating person to argue with. The Attorney’s opponents will feel like they’re arguing with a brick wall, and by the end, it’ll be clear that nothing they could have said, nothing whatsoever, would have made the Attorney say, “Hmm, that’s a good point. I need to think about that. Maybe I’m wrong.”

The result of thinking like an Attorney is that your brain’s incredible ability to learn new things is mostly shut down. Even worse, your determination to confirm your existing beliefs leaves you confident about a bunch of things that aren’t true. Your efforts only make you more delusional. If there’s anything positive to say about Attorney thinking, it’s that it at least acknowledges the concept of the knowledge-building process. When you’re thinking like an Attorney, you’re unconvinceable, but you’re not that big an internal shift away from high-rung thinking. From somewhere in the periphery of your mind, the voice of the Higher Mind still carries some weight. And if you can learn to listen to it and value it, maybe things can change.

But sometimes, there are beliefs that your Primitive Mind holds so dear that your Higher Mind has no influence at all over how you think about them. When dealing with these topics, ideas and people feel inseparable and changing your mind feels like an existential threat. You’re on the bottom rung.

Rung 4: Thinking like a Zealot

Imagine you’ve just had your first baby. Super exciting, right?

And every day when you look at your baby, you can’t believe how cute it is.

Just like no parent has to research whether their baby is lovable, the Zealot doesn’t have to go from A to B to know their viewpoints are correct—they just know they are. With 100% conviction.6

Likewise with skepticism. If someone told you your actual baby was super cute, you wouldn’t assess their credibility, you’d be in automatic full agreement. And if someone told you your baby was an asshole, you wouldn’t consider their opinion, you’d just think they were a terrible person.

That’s why the Zealot’s flip-flop goes from one extreme to the other, with no in between.

When Zealots argue, things can quickly get heated, because for someone who identifies with their ideas, a challenge to those ideas feels like an insult. It feels personally invalidating. A punch landed on a Zealot’s idea is a punch landed on their baby.

When the Primitive Mind is overactive in our heads, it turns us into crazy people. On top of making us think our ideas are babies, it shows us a distorted view of ourselves.

And it shows us a distorted view of the world. While the Scientist’s clear mind sees a foggy world, full of complexity and nuance and messiness, the Zealot’s foggy mind shows them a clear, simple world, full of crisp lines and black-and-white distinctions. When you’re thinking like a Zealot, you end up in a totally alternative reality, feeling like you’re an omniscient being in total possession of the truth.

High-rung thinking, low-rung thinking

The four thinking rungs are all distinct, but they fall into two broad categories: high-rung thinking (Scientist and Sports Fan) and low-rung thinking (Attorney and Zealot).

High-rung thinking is independent thinking, leaving you free to revise your ideas or even discard them altogether. But when there’s no amount of evidence that will change your mind about something, it means that idea is your boss. On the low rungs, you’re working to dutifully serve your ideas, not the other way around.

High-rung thinking is productive thinking. The humility of the high-rung mindset makes your mind a permeable filter that absorbs life experience and converts it into knowledge and wisdom. On the other hand, the arrogance of low-rung thinking makes your mind a rubber shell that life experience bounces off of. One begets learning, the other ignorance.

We all spend time on the low rungs,7 and when we’re thinking this way, we don’t realize we’re doing it. We believe our conviction has been hard-earned. We believe our viewpoints are original and based on knowledge. Because as the Primitive Mind’s influence grows in our heads, so does the fog that clouds our consciousness. This is how low-rung thinking persists.

Each of us is a work in progress. We’ll never rid our lives of low-rung thinking, but the more we evolve psychologically, the more time we spend thinking from the high rungs and the less time we spend down below. Improving this ratio is a good intellectual goal for all of us.

But this is just the beginning of our journey. Because individual thinking is the center of a much larger picture. We’re social creatures, and as with most things, the way we think is often intertwined with the people we surround ourselves with.

Intellectual Cultures

We can define “culture” as the unwritten rules regarding “how we do things here.”

Every human environment—from two-person couples to 20-person classrooms to 20,000-person companies—is embedded with its own culture. We can visualize a group’s culture as a kind of gas cloud that fills the room when the group is together.

A group’s culture influences its members with a social incentive system. Those who play by the culture’s rules are rewarded with acceptance, respect, and praise, while those who violate them face penalties like ridicule, shame, and ostracism.

Human society is a rich tapestry of overlapping and sometimes sharply contradictory cultures, and each of us lives at our own unique cultural intersection.

Someone working at a tech startup in the Bay Area is simultaneously living within the global Western community, the American community, the West Coast community, the San Francisco community, the tech industry community, the startup community, the community of their workplace, the community of their college alumni, the community of their extended family, the community of their group of friends, and a few other bizarre SF-y situations. Going against the current of all these larger communities combined tends to be easier than violating the unwritten rules of our most intimate micro-cultures, made up of our immediate family, closest friends, and romantic relationships.

Living simultaneously in multiple cultures is part of what makes being a human tricky. Do we keep our individual inner values to ourselves and just do our best to match our external behavior to whatever culture we’re currently in a room with? Or do we stay loyal to one particular culture and live by those rules everywhere, even at our social or professional peril? Do we navigate our lives to seek out external cultures that match our own values and minimize friction? Or do we surround ourselves with a range of conflicting cultures to put some pressure on our inner minds to learn and grow? Whether you consciously realize it or not, you’re making these decisions all the time.

Culture can encompass many aspects of interaction. A group of friends, for example, has a way they do birthdays, a way they do emojis, a way they do talking behind each other’s backs, a way they do conflict, and so on. For our purposes, we’ll again limit our discussion to how all this pertains to thinking, or a group’s intellectual culture—“how we do things here” as it relates to the expression of ideas.

In the same way the two aspects of your mind compete for control over how you think, a similar struggle happens on a larger scale over how the group thinks. Higher Minds can band together with other Higher Minds and form a kind of coalition, and a group’s collective Primitive Minds can do the same thing. One coalition gaining control over the culture is like home-field advantage in sports—a hard one for the “away team” to overcome.

Let’s first explore what it looks like when the Higher Minds have the reins of a group’s intellectual culture.

Idea Labs

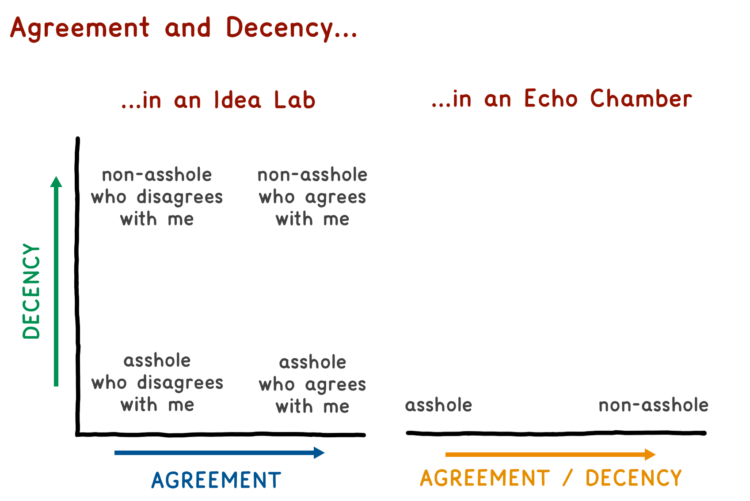

Most of us know the term “Echo Chamber,” and we’ll get to that in a minute—but we sorely lack a term for the opposite of an Echo Chamber. When the rules of a group’s intellectual culture mirror the values of high-rung thinking, the group is what I call an Idea Lab.

An Idea Lab is an environment of collaborative high-rung thinking. People in an Idea Lab see one another as experimenters and their ideas as experiments. Idea Labs value independent thinking and viewpoint diversity. This combination leads to the richest and most interesting conversations and maximizes the scope of group discussions.

Idea Labs hold humility in high regard, and saying “I don’t know” usually wins trust and respect. When someone who often says “I don’t know” does express conviction about a viewpoint, it really means something. Others will take it to heart without needing much skepticism, which saves the listener time and effort. Likewise, unearned conviction is a major no-no in an Idea Lab. So someone with a reputation for bias or arrogance or dishonesty will be met with a high degree of skepticism, no matter how much conviction they express.

Idea Labs also love arguments. Ideas in an Idea Lab are treated like hypotheses, which means people are always looking for opportunities to test what they’ve been thinking about. Idea Labs are the perfect boxing ring for that testing.

Sometimes high-rung thinkers engage in debate, defending an idea, strenuously arguing for its validity.

Other times, they’ll engage in dialectic, joining the dissenter in examining their idea.

They may even try flipping sides and playing devil’s advocate, debating against someone who agrees with them in order to see their idea through another lens.

People in an Idea Lab don’t usually take arguments personally because Idea Lab culture is built around the core notion that people and ideas are separate things. People are meant to be respected; ideas are meant to be batted around and picked apart.

Perhaps most importantly, an Idea Lab helps its members stay high up on the Ladder. No one thinks like pure top-rung Scientists all the time. More often, after a brief stint on the top rung during an especially lucid and humble period, we start to like the new epiphanies we gleaned up there a little too much, and we quickly drop down to the Sports Fan rung. And that’s okay. It might even be optimal to be a little over-confident in our intellectual lives. Rooting for our ideas—a new philosophy, a new lifestyle choice, a new business strategy—allows us to really give them a try, somewhat liberated from the constant “but are we really sure about this?” nag from the Higher Mind.

The Sports Fan rung alone isn’t a problem. The problem is that inviting some bias into the equation is a bit like closing your eyes for just another minute after you’ve shut your alarm off for good—it’s riskier than it feels. Getting a little attached to an idea is a small step away from drifting unconsciously into Unconvinceable Land and the oblivion of the rungs down below. We’re pre-programmed to be low-rung thinkers, so our intellects are always fighting against gravity.

This is why Idea Lab culture is so important. It’s a support network for flawed thinkers to help each other stay up on the high rungs.

The social pressure helps. If high-rung thinking is what all the cool kids are doing, you’re more likely to think that way.

And the intellectual pressure helps. In an Idea Lab—where people don’t hesitate to tell you when you’re wrong or biased or hypocritical or gullible—humility and self-awareness are inflicted upon you. Whenever you get a little too overconfident, Idea Lab culture pulls you back to an honest level of conviction.

All these forces combine to make an Idea Lab a big magnet on top of the Ladder that pulls upward on the psyches of people immersed in it.

But what happens when a group’s Primitive Minds can run things their way?

Echo Chambers

An Echo Chamber is what happens when a group’s intellectual culture slips down to the low rungs: collaborative low-rung thinking.

While Idea Labs are cultures of critical thinking and debate, Echo Chambers are cultures of groupthink and conformity. Because while Idea Labs are devoted to a kind of thinking, Echo Chambers are devoted to a set of beliefs the culture deems to be sacred.

A culture that treats ideas like sacred objects incentivizes entirely different behavior than the Idea Lab. In an Echo Chamber, falling in line with the rest of the group is socially rewarded. It’s a common activity to talk about how obviously correct the sacred ideas are—it’s how you express your allegiance to the community and prove your own intellectual and moral worth.

Humility is looked down upon in an Echo Chamber, where saying “I don’t know” just makes you sound ignorant and changing your mind makes you seem wishy-washy. And conviction, used sparingly in an Idea Lab, is social currency in an Echo Chamber. The more conviction you speak with, the more knowledgeable, intelligent, and righteous you seem.

Idea Labs can simultaneously respect a person and disrespect the person’s ideas. But Echo Chambers equate a person’s ideas with their identity, so respecting a person and respecting their ideas are one and the same. Disagreeing with someone in an Echo Chamber is seen not as intellectual exploration but as rudeness, making an argument about ideas indistinguishable from a fight.

This moral component provides Echo Chambers with a powerful tool for cultural law enforcement: taboo. Those who challenge the sacred ideas are seen not just as wrong but as bad people. As such, violators are slapped with the social fines of status reduction or reputation damage, the social jail time of ostracism, and even the social death penalty of permanent excommunication. Express the wrong opinion on God, abortion, patriotism, immigration, race, or capitalism in the wrong group and you may be met with an explosive negative reaction. Echo Chambers are places where you must watch what you say.

An Echo Chamber can be the product of a bunch of people who all hold certain ideas to be sacred. Other times, it can be the product of one or a few “intellectual bullies” who everyone else is scared to defy. Even in the smallest group—a married couple, say—if one person knows that it’s never worth the fight to challenge their spouse’s strongly held viewpoints, the spouse is effectively imposing Echo Chamber culture on the marriage.

Intellectual cultures have a major impact on the individuals within them. While Idea Lab culture encourages intellectual and moral growth, Echo Chamber culture discourages new ideas, curbs intellectual innovation, and removes knowledge-acquisition tools like debate—all of which repress growth.

Spending too much time in an Echo Chamber makes people feel less humble and more sure of themselves, all while limiting actual learning and causing thinking skills to atrophy.

In a broader sense, both primitive-mindedness and high-mindedness tend to be contagious. While Idea Lab culture is a support group that helps keep people’s minds up on the high rungs, Echo Chamber culture pumps out Primitive Mind pheromones and exerts a general downward pull on the psyches of its members.

Given the obvious benefits of Idea Lab culture, it’s odd that we ever go for the alternative. We eat Skittles because our Primitive Minds are programmed to want sugary, calorie-dense food. But why do our Primitive Minds want us to build Echo Chambers?

Let’s zoom out further…

This was an excerpt from What’s Our Problem? A Self-Help Book for Societies. To read the whole book, get the ebook or audiobook on all platforms here or buy the Wait But Why version to keep reading here.

According to biologist Richard Dawkins, future geneticists may be able to look at an individual’s genome and “read off a description of the worlds in which the ancestors of that animal lived.” (Harris 2019)↩

This book took me six years, which is at least two years longer than it should have taken.↩

The Idea Spectrum is a pretty rigid tool—it’s linear and one-dimensional, and most worlds of thought are more complex and involve multiple dimensions simultaneously. But most of these worlds can also be roughly explored on a simple Idea Spectrum, and for our purposes, oversimplifying areas of thought to single spectrums can help us see what’s going on.↩

Of course, real-world scientists often do not think like Scientists—something we’ll talk about later in the book. In this book, when we talk about capital-S Scientists, we’re talking about a certain thinking process: what Carl Sagan meant when he said, “Science is a way of thinking much more than it is a body of knowledge.” (Broca’s Brain, 15)↩

My copyeditor tried to change this to “inconvinceable” because unconvinceable isn’t a word. But I like “Unconvinceable Land” more, so you’ll just have to deal with it.↩

Thinking like a Zealot leaves someone low on the knowledge axis because it means their knowledge-acquisition mechanism isn’t working correctly. It doesn’t mean they’re wrong about every opinion—just that when they’re right, it’s a credit to luck more than their own reasoning abilities.↩

In case you’re thinking, “I’m a really smart person, so I’m safe from the low rungs,” Adam Grant has bad news for you: “Research reveals that the higher you score on an IQ test, the more likely you are to fall for stereotypes, because you’re faster at recognizing patterns. And recent experiments suggest that the smarter you are, the more you might struggle to update your beliefs.” (Grant 2021, 24)↩

Two professors of evolutionary sciences developed a model to answer the question, “Under what conditions might a tendency for performing behaviours that incorrectly assign cause and effect be adaptive from an individual fitness point of view?” Though the simple model wasn’t designed to explain complex human behavior, it explains why evolution might favor superstitious behavior: If there’s significant reward for a correct guess (“e.g., not being eaten by a predator”), it’s worth acting on possibly incorrect guesses. Kevin R. Foster and Hanna Kokko, “The Evolution of Superstitious and Superstition-like Behaviour,” Proceedings of the Royal Society B: Biological Sciences 276, no. 1654 (January 7, 2009): 31–37, https://doi.org/10.1098/rspb.2008.0981.↩

Seng-ts’an, Buddhist Texts Through the Ages, ed. Edward Conze, trans. Arthur Waley (New York: Philosophical Library, 1954), 296.↩

Credit to Jonathan Haidt for this wording: “We don’t look out at the world and say, ‘Where’s the weight of the evidence?’ We start with an original supposition and we say, ‘Can I believe it?’ If I want to believe something, I ask: Can I believe it? Can I find the justification? But if I don’t want to believe it, I say: Must I believe it? Am I forced to believe it? Or can I escape?” GOACTA, Free to Teach, Free to Learn, YouTube, Athena Roundtable, 2016, sec. 8:18–8:41, https://youtu.be/ntN4_iRp4UE.↩

Researchers at Princeton conducted two studies in which participants reacted to a university president’s beliefs on affirmative action. They found that students were more likely to perceive the president as biased if they personally disagreed with her beliefs. Kathleen A. Kennedy and Emily Pronin, “When Disagreement Gets Ugly: Perceptions of Bias and the Escalation of Conflict,” Personality and Social Psychology Bulletin 34, no. 6 (June 2008): 835–37, https://doi.org/10.1177/0146167208315158.↩